Performance Comparison of the eIQ Machine Learning Framework on iMX8M Plus and iMX8QM

By Toradex 胡珊逢

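Machine learning algorithms are computationally demanding, so they are usually accelerated with a GPU or with a dedicated processor such as an NPU. Two processors released in succession by NXP, the iMX8QuadMax and the iMX8M Plus, can accelerate common machine learning frameworks such as TensorFlow Lite with a GPU and an NPU respectively. This article uses the NXP eIQ framework to test the performance of different frameworks on both processors.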

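The tests use two Toradex modules: Apalis iMX8QM 4GB WB IT V1.1C and Verdin iMX8M Plus Quad 4GB WB IT V1.0B. The BSP is Linux BSP V5.3, and eIQ is the zeus-5.4.70-2.3.3 release. The default Toradex Yocto Project build environment does not integrate the eIQ software directly, so the meta-ml layer has to be added and built by following the referenced instructions. Then change the Python version in meta-ml/recipes-devtools/python/python3-pybind11_2.5.0.bb to 3.8, as in the EXTRA_OECMAKE setting below, and build the multimedia image.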

-------------------------------------

EXTRA_OECMAKE = "-DPYBIND11_TEST=OFF \
                 -DPYTHON_EXECUTABLE=${RECIPE_SYSROOT_NATIVE}/usr/bin/python3-native/python3.8 \
"

-------------------------------------

Use Toradex Easy Installer to install the generated image on both modules, Apalis iMX8QM 4GB WB IT V1.1C and Verdin iMX8M Plus Quad 4GB WB IT V1.0B.

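The tests follow NXP's i.MX_Machine_Learning_User's_Guide and cover TensorFlow Lite, Arm NN, ONNX, and PyTorch. OpenCV is left out because it currently runs only on the CPU of the iMX8QuadMax and iMX8M Plus and cannot use GPU or NPU acceleration. In addition, testing Caffe models with Arm NN has two restrictions. First, the batch size must be 1. For example, the deploy.prototxt file is modified as follows: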

-------------------------------------

name: "AlexNet"
layer {
  name: "data"
  type: "Input"
  top: "data"
  input_param { shape: { dim: 1 dim: 3 dim: 227 dim: 227 } }
}

-------------------------------------
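The batch-size edit can also be scripted. The following is a minimal sketch, assuming the network declares its input with an `input_param { shape: { dim: ... } }` entry as in the example above; the helper name `force_batch_size_one` and the sample batch size of 10 are hypothetical, and older prototxt files that use `input_dim:` lines are not handled.

```python
import re

# Matches the start of an input_param shape up to (but not including) the
# first dim value, which is the batch size.
BATCH_DIM_RE = re.compile(r"(input_param\s*\{\s*shape:\s*\{\s*dim:\s*)\d+")


def force_batch_size_one(prototxt_text: str) -> str:
    """Return the prototxt text with the first input dim (batch size) set to 1."""
    return BATCH_DIM_RE.sub(r"\g<1>1", prototxt_text)


# Hypothetical input: the same layer as above, but with a batch size of 10.
example = '''name: "AlexNet"
layer {
  name: "data"
  type: "Input"
  top: "data"
  input_param { shape: { dim: 10 dim: 3 dim: 227 dim: 227 } }
}
'''

patched = force_batch_size_one(example)
print(patched)
```

Only the first `dim` of each input shape is rewritten; the channel and spatial dimensions are left untouched.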

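Second, Arm NN does not support the full Caffe syntax, so some older neural network model files must be upgraded to the latest Caffe syntax. The following Python 3 script, run on a PC, performs the conversion.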

-------------------------------------

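import caffe

# Load the old model with a current Caffe build, then save it again so that
# the weights are written out in the current format.
net = caffe.Net('lenet.prototxt', 'lenet_iter_9000-orignal.caffemodel', caffe.TEST)
net.save('lenet_iter_9000.caffemodel')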

-------------------------------------

The test results on the two modules are as follows.

TensorFlow Lite

• Apalis iMX8QM

label_image

-------------------------------------

root@apalis-imx8:/usr/bin/tensorflow-lite-2.4.0/examples# USE_GPU_INFERENCE=1 ./label_image -m mobilenet_v1_1.0_224_quant.tflite -i grace_hopper.bmp -l labels.txt -a 1
INFO: Loaded model mobilenet_v1_1.0_224_quant.tflite
INFO: resolved reporter
INFO: Created TensorFlow Lite delegate for NNAPI.
INFO: Applied NNAPI delegate.
INFO: invoked
INFO: average time: 12.407 ms
INFO: 0.784314: 653 military uniform
INFO: 0.105882: 907 Windsor tie
INFO: 0.0156863: 458 bow tie
INFO: 0.0117647: 466 bulletproof vest
INFO: 0.00784314: 668 mortarboard

-------------------------------------

benchmark_model

-------------------------------------

root@apalis-imx8:/usr/bin/tensorflow-lite-2.4.0/examples# ./benchmark_model --graph=mobilenet_v1_1.0_224_quant.tflite --use_nnapi=true
STARTING!
Log parameter values verbosely: [0]
Graph: [mobilenet_v1_1.0_224_quant.tflite]
Use NNAPI: [1]
NNAPI accelerators available: [vsi-npu]
Loaded model mobilenet_v1_1.0_224_quant.tflite
INFO: Created TensorFlow Lite delegate for NNAPI.
Explicitly applied NNAPI delegate, and the model graph will be completely executed by the delegate.
The input model file size (MB): 4.27635
Initialized session in 16.746ms.
Running benchmark for at least 1 iterations and at least 0.5 seconds but terminate if exceeding 150 seconds.
count=17 first=305296 curr=12471 min=12299 max=305296 avg=29650 std=68911
Running benchmark for at least 50 iterations and at least 1 seconds but terminate if exceeding 150 seconds.
count=81 first=12417 curr=12430 min=12294 max=12511 avg=12405.6 std=39
Inference timings in us: Init: 16746, First inference: 305296, Warmup (avg): 29650, Inference (avg): 12405.6
Note: as the benchmark tool itself affects memory footprint, the following is only APPROXIMATE to the actual memory footprint of the model at runtime. Take the information at your discretion.
Peak memory footprint (MB): init=1.85938 overall=55.1406

-------------------------------------

• Verdin iMX8M Plus

label_image

-------------------------------------

root@verdin-imx8mp:/usr/bin/tensorflow-lite-2.4.0/examples# USE_GPU_INFERENCE=0 ./label_image -m mobilenet_v1_1.0_224_quant.tflite -i grace_hopper.bmp -l labels.txt -a 1
INFO: Loaded model mobilenet_v1_1.0_224_quant.tflite
INFO: resolved reporter
INFO: Created TensorFlow Lite delegate for NNAPI.
INFO: Applied NNAPI delegate.
INFO: invoked
INFO: average time: 2.835 ms
INFO: 0.768627: 653 military uniform
INFO: 0.105882: 907 Windsor tie
INFO: 0.0196078: 458 bow tie
INFO: 0.0117647: 466 bulletproof vest
INFO: 0.00784314: 835 suit

-------------------------------------

benchmark_model

-------------------------------------

root@verdin-imx8mp:/usr/bin/tensorflow-lite-2.4.0/examples# ./benchmark_model --graph=mobilenet_v1_1.0_224_quant.tflite --use_nnapi=true
STARTING!
Log parameter values verbosely: [0]
Graph: [mobilenet_v1_1.0_224_quant.tflite]
Use NNAPI: [1]
NNAPI accelerators available: [vsi-npu]
Loaded model mobilenet_v1_1.0_224_quant.tflite
INFO: Created TensorFlow Lite delegate for NNAPI.
Explicitly applied NNAPI delegate, and the model graph will be completely executed by the delegate.
The input model file size (MB): 4.27635
Initialized session in 16.79ms.
Running benchmark for at least 1 iterations and at least 0.5 seconds but terminate if exceeding 150 seconds.
count=1 curr=6664535
Running benchmark for at least 50 iterations and at least 1 seconds but terminate if exceeding 150 seconds.
count=367 first=2734 curr=2646 min=2624 max=2734 avg=2650.05 std=16
Inference timings in us: Init: 16790, First inference: 6664535, Warmup (avg): 6.66454e+06, Inference (avg): 2650.05
Note: as the benchmark tool itself affects memory footprint, the following is only APPROXIMATE to the actual memory footprint of the model at runtime. Take the information at your discretion.
Peak memory footprint (MB): init=1.79297 overall=28.5117

-------------------------------------
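To compare the two benchmark_model runs without reading the logs by eye, the average inference time can be pulled out of the "Inference timings in us" summary line. The sketch below is only an illustration: the helper name `avg_inference_us` is hypothetical, and the two input strings are the summary lines copied from the logs above.

```python
import re

# The summary line reports microseconds; the value may use scientific notation.
SUMMARY_RE = re.compile(r"Inference \(avg\): ([0-9.eE+]+)")


def avg_inference_us(log_text: str) -> float:
    """Extract the 'Inference (avg)' value in microseconds from a benchmark log."""
    match = SUMMARY_RE.search(log_text)
    if match is None:
        raise ValueError("no 'Inference (avg)' field found")
    return float(match.group(1))


apalis_log = (
    "Inference timings in us: Init: 16746, First inference: 305296, "
    "Warmup (avg): 29650, Inference (avg): 12405.6"
)
verdin_log = (
    "Inference timings in us: Init: 16790, First inference: 6664535, "
    "Warmup (avg): 6.66454e+06, Inference (avg): 2650.05"
)

apalis_us = avg_inference_us(apalis_log)
verdin_us = avg_inference_us(verdin_log)
ratio = apalis_us / verdin_us

print(f"Apalis iMX8QM:     {apalis_us / 1000:.2f} ms")
print(f"Verdin iMX8M Plus: {verdin_us / 1000:.2f} ms")
print(f"Ratio (Apalis / Verdin): {ratio:.2f}")
```

The ratio covers steady-state inference only. The first inference is far slower on both modules (305296 us and 6664535 us in the logs above), so it is reported separately by the tool and excluded from the average.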

Arm NN

• Apalis iMX8QM

CaffeAlexNet-Armnn

-------------------------------------

root@apalis-imx8:/usr/bin/armnn-20.08/ArmnnTests# ../CaffeAlexNet-Armnn --data-dir=data --model-dir=models
Info: ArmNN v22.0.0
Info: Initialization time: 0.14 ms
Info: Network parsing time: 1397.76 ms
Info: Optimization time: 195.13 ms
Info: = Prediction values for test #0
Info: Top(1) prediction is 2 with value: 0.706226
Info: Top(2) prediction is 0 with value: 1.26573e-05
Info: Total time for 1 test cases: 0.264 seconds
Info: Average time per test case: 263.701 ms
Info: Overall accuracy: 1.000
Info: Shutdown time: 56.83 ms

-------------------------------------

CaffeMnist-Armnn

-------------------------------------

root@apalis-imx8:/usr/bin/armnn-20.08/ArmnnTests# ../CaffeMnist-Armnn --data-dir=data --model-dir=models
Info: ArmNN v22.0.0
Info: Initialization time: 0.09 ms
Info: Network parsing time: 8.70 ms
Info: Optimization time: 2.67 ms
Info: = Prediction values for test #0
Info: Top(1) prediction is 7 with value: 1
Info: Top(2) prediction is 0 with value: 0
Info: = Prediction values for test #1
Info: Top(1) prediction is 2 with value: 1
Info: Top(2) prediction is 0 with value: 0
Info: = Prediction values for test #5
Info: Top(1) prediction is 1 with value: 1
Info: Top(2) prediction is 0 with value: 0
Info: = Prediction values for test #8
Info: Top(1) prediction is 5 with value: 1
Info: Top(2) prediction is 0 with value: 0
Info: = Prediction values for test #9
Info: Top(1) prediction is 9 with value: 1
Info: Top(2) prediction is 0 with value: 0
Info: Total time for 5 test cases: 0.015 seconds
Info: Average time per test case: 2.927 ms
Info: Overall accuracy: 1.000
Info: Shutdown time: 1.56 ms

-------------------------------------

CaffeVGG-Armnn

-------------------------------------

root@apalis-imx8:/usr/bin/armnn-20.08/ArmnnTests# ../CaffeVGG-Armnn --data-dir=data --model-dir=models
Info: ArmNN v22.0.0
Info: Initialization time: 0.08 ms
Info: Network parsing time: 1452.35 ms
Info: Optimization time: 491.98 ms
Info: = Prediction values for test #0
Info: Top(1) prediction is 2 with value: 0.692014
Info: Top(2) prediction is 0 with value: 9.80887e-07
Info: Total time for 1 test cases: 2.723 seconds
Info: Average time per test case: 2722.846 ms
Info: Overall accuracy: 1.000
Info: Shutdown time: 115.74 ms

-------------------------------------

• Verdin iMX8M Plus

CaffeAlexNet-Armnn

-------------------------------------

root@verdin-imx8mp:/usr/bin/armnn-20.08/ArmnnTests# ../CaffeAlexNet-Armnn --data-dir=data --model-dir=models
Info: ArmNN v22.0.0
Info: Initialization time: 0.12 ms
Info: Network parsing time: 1250.55 ms
Info: Optimization time: 141.40 ms
Info: = Prediction values for test #0
Info: Top(1) prediction is 2 with value: 0.706225
Info: Top(2) prediction is 0 with value: 1.26573e-05
Info: Total time for 1 test cases: 0.110 seconds
Info: Average time per test case: 110.124 ms
Info: Overall accuracy: 1.000
Info: Shutdown time: 15.04 ms

-------------------------------------

CaffeMnist-Armnn

-------------------------------------

root@verdin-imx8mp:/usr/bin/armnn-20.08/ArmnnTests# ../CaffeMnist-Armnn --data-dir=data --model-dir=models
Info: ArmNN v22.0.0
Info: Initialization time: 0.11 ms
Info: Network parsing time: 8.96 ms
Info: Optimization time: 3.01 ms
Info: = Prediction values for test #0
Info: Top(1) prediction is 7 with value: 1
Info: Top(2) prediction is 0 with value: 0
Info: = Prediction values for test #1
Info: Top(1) prediction is 2 with value: 1
Info: Top(2) prediction is 0 with value: 0
Info: = Prediction values for test #5
Info: Top(1) prediction is 1 with value: 1
Info: Top(2) prediction is 0 with value: 0
Info: = Prediction values for test #8
Info: Top(1) prediction is 5 with value: 1
Info: Top(2) prediction is 0 with value: 0
Info: = Prediction values for test #9
Info: Top(1) prediction is 9 with value: 1
Info: Top(2) prediction is 0 with value: 0
Info: Total time for 5 test cases: 0.008 seconds
Info: Average time per test case: 1.608 ms
Info: Overall accuracy: 1.000
Info: Shutdown time: 1.69 ms

-------------------------------------

CaffeVGG-Armnn

-------------------------------------

root@verdin-imx8mp:/usr/bin/armnn-20.08/ArmnnTests# ../CaffeVGG-Armnn --data-dir=data --model-dir=models
Info: ArmNN v22.0.0
Info: Initialization time: 0.15 ms
Info: Network parsing time: 2842.95 ms
Info: Optimization time: 316.74 ms
Info: = Prediction values for test #0
Info: Top(1) prediction is 2 with value: 0.692015
Info: Top(2) prediction is 0 with value: 9.8088e-07
Info: Total time for 1 test cases: 1.098 seconds
Info: Average time per test case: 1097.593 ms
Info: Overall accuracy: 1.000
Info: Shutdown time: 130.65 ms

-------------------------------------
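The Arm NN logs report one-time setup costs (network parsing and optimization) separately from the per-inference average, and for the larger models the setup cost is the bigger number. A small sketch of how these fields could be separated is shown below; the helper name `armnn_timings` is hypothetical, and the sample text is an excerpt of the Verdin iMX8M Plus CaffeVGG-Armnn log above.

```python
import re

# Capture the three timing fields of interest, all reported in milliseconds.
FIELD_RE = re.compile(
    r"Info: (Network parsing time|Optimization time|Average time per test case): "
    r"([0-9.]+) ms"
)


def armnn_timings(log_text: str) -> dict:
    """Map each recognised timing field name to its value in milliseconds."""
    return {name: float(value) for name, value in FIELD_RE.findall(log_text)}


verdin_vgg_log = """\
Info: ArmNN v22.0.0
Info: Initialization time: 0.15 ms
Info: Network parsing time: 2842.95 ms
Info: Optimization time: 316.74 ms
Info: Total time for 1 test cases: 1.098 seconds
Info: Average time per test case: 1097.593 ms
Info: Shutdown time: 130.65 ms
"""

timings = armnn_timings(verdin_vgg_log)
setup_ms = timings["Network parsing time"] + timings["Optimization time"]
inference_ms = timings["Average time per test case"]

print(f"one-time setup: {setup_ms:.2f} ms")
print(f"per inference:  {inference_ms:.2f} ms")
```

For an application that loads the network once and then runs many inferences, the per-inference figure is the one that matters; for a one-shot command-line run, the setup time dominates the total.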

ONNX

• Apalis iMX8QM

onnx_test_runner

-------------------------------------

root@apalis-imx8:~# time onnx_test_runner -j 1 -c 1 -r 1 -e vsi_npu ./mobilenetv2-7/
result:
Models: 1
Total test cases: 3
Succeeded: 3
Not implemented: 0
Failed: 0
Stats by Operator type:
Not implemented(0):
Failed:
Failed Test Cases:
real 0m0.643s
user 0m1.513s
sys 0m0.111s

-------------------------------------

• Verdin iMX8M Plus

onnx_test_runner

-------------------------------------

root@verdin-imx8mp:~# time onnx_test_runner -j 1 -c 1 -r 1 -e vsi_npu ./mobilenetv2-7/
result:
Models: 1
Total test cases: 3
Succeeded: 3
Not implemented: 0
Failed: 0
Stats by Operator type:
Not implemented(0):
Failed:
Failed Test Cases:
real 0m0.663s
user 0m1.195s
sys 0m0.073s

-------------------------------------

PyTorch

• Apalis iMX8QM

pytorch_mobilenetv2.py

-------------------------------------

root@apalis-imx8:/usr/bin/pytorch/examples# time python3 pytorch_mobilenetv2.py
('tabby, tabby cat', 46.348018646240234)
('tiger cat', 35.17843246459961)
('Egyptian cat', 15.802857398986816)
('lynx, catamount', 1.161122441291809)
('tiger, Panthera tigris', 0.20774582028388977)
real 0m8.806s
user 0m7.440s
sys 0m0.593s

-------------------------------------

• Verdin iMX8M Plus

pytorch_mobilenetv2.py

-------------------------------------

root@verdin-imx8mp:/usr/bin/pytorch/examples# time python3 pytorch_mobilenetv2.py
('tabby, tabby cat', 46.348018646240234)
('tiger cat', 35.17843246459961)
('Egyptian cat', 15.802857398986816)
('lynx, catamount', 1.161122441291809)
('tiger, Panthera tigris', 0.20774582028388977)
real 0m6.313s
user 0m5.933s
sys 0m0.295s

-------------------------------------

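Summary Comparison

The performance gap between the two modules varies with the test application. In the logs above, TensorFlow Lite benchmark_model averages 12.41 ms per inference on Apalis iMX8QM versus 2.65 ms on Verdin iMX8M Plus. The Arm NN tests average 263.7 ms versus 110.1 ms (AlexNet), 2.93 ms versus 1.61 ms (MNIST), and 2722.8 ms versus 1097.6 ms (VGG). The PyTorch example takes 8.8 s versus 6.3 s of wall-clock time, while the ONNX test runner finishes in about 0.65 s on both modules. Overall, common machine learning workloads perform better on the NPU of the Verdin iMX8M Plus than on the GPU of the Apalis iMX8QM.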
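The size of the gap for each test can be worked out directly from the logged times. The sketch below hard-codes the figures from the logs in this article (converted to milliseconds) and divides the Apalis iMX8QM time by the Verdin iMX8M Plus time; a ratio above 1 means the Verdin iMX8M Plus was faster. The ONNX and PyTorch rows use the `real` wall-clock time of the whole command, so they include process startup and model loading, not just inference.

```python
# (test name, Apalis iMX8QM time in ms, Verdin iMX8M Plus time in ms),
# all values taken from the logs in this article.
results = [
    ("TensorFlow Lite benchmark_model", 12.4056, 2.65005),
    ("Arm NN CaffeAlexNet", 263.701, 110.124),
    ("Arm NN CaffeMnist", 2.927, 1.608),
    ("Arm NN CaffeVGG", 2722.846, 1097.593),
    ("ONNX onnx_test_runner (wall clock)", 643.0, 663.0),
    ("PyTorch pytorch_mobilenetv2.py (wall clock)", 8806.0, 6313.0),
]

# Ratio > 1: Verdin iMX8M Plus is faster; ratio < 1: Apalis iMX8QM is faster.
speedups = {name: apalis_ms / verdin_ms for name, apalis_ms, verdin_ms in results}

for name, apalis_ms, verdin_ms in results:
    print(f"{name:45s} {apalis_ms:10.2f} ms {verdin_ms:10.2f} ms "
          f"ratio {speedups[name]:.2f}")
```

Each figure comes from a single run per module, so small differences such as the ONNX result should be read as roughly equal rather than as a meaningful lead for either module.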

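Conclusion

Machine learning is a fairly complex application. Beyond the processor hardware, how well the model itself is optimized also affects performance. Given the limited processing power of embedded systems in particular, taking a model built for a PC and using it unchanged usually performs poorly. The computer module should also be chosen to match the project requirements, since Verdin iMX8M Plus and Apalis iMX8QM target different uses.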

提交

Verdin AM62 LVGL 移植

基于 NXP iMX8MM 测试 Secure Boot 功能

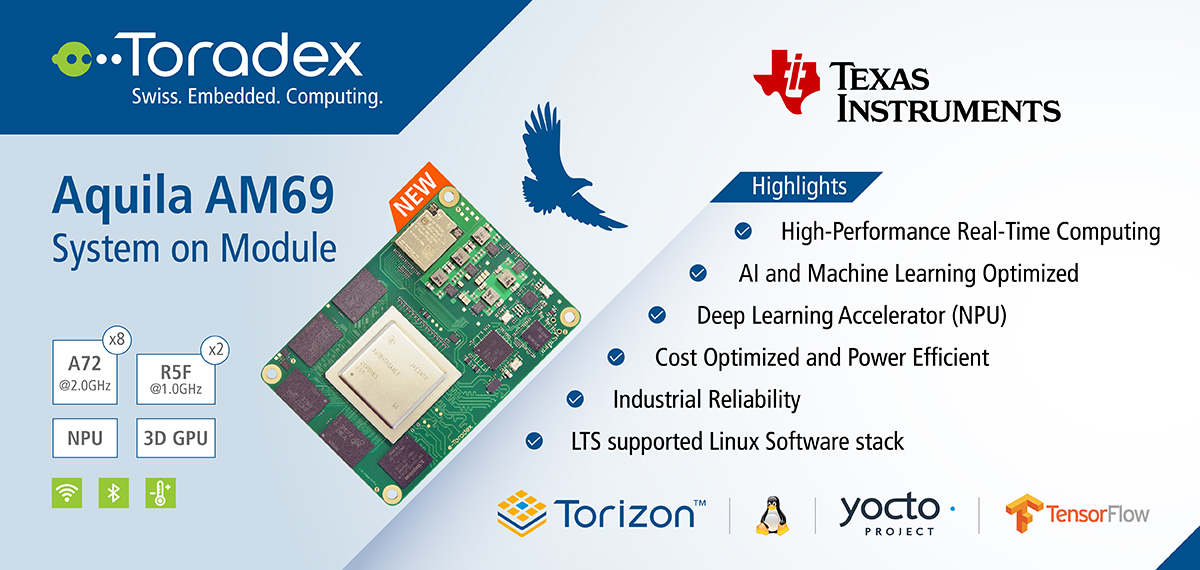

隆重推出 Aquila - 新一代 Toradex 计算机模块

Verdin iMX8MP 调试串口更改

NXP iMX8MM Cortex-M4 核心 GPT Capture 测试

投诉建议

投诉建议